10 Strategic Considerations When Building Apps for ChatGPT

Rob Pisacane, Founder

Published October 16, 2025

Introduction

With over 200 million weekly active users, ChatGPT has become the interface where millions of people start their workday, research purchases, and solve problems. For consumer-facing businesses, building custom GPTs or ChatGPT integrations represents a strategic opportunity to embed your product into these daily workflows.

But successful ChatGPT apps aren’t just technical integrations—they require careful consideration of user experience, business model implications, security architecture, and go-to-market strategy. This article outlines ten critical considerations for CPOs, CTOs, and investment directors evaluating ChatGPT app development.

1. Authentication Strategy: Balancing Friction and Security

The Challenge

ChatGPT apps can operate in three authentication modes: open (no auth required), API-key based, or OAuth. Your choice fundamentally shapes user experience and revenue potential.

Strategic Decision Points

Open authentication maximizes distribution but limits personalization and prevents revenue capture. API-key authentication works for developer tools but creates friction for consumer applications. OAuth provides the best user experience for authenticated features but requires users to connect accounts.

The critical question: at what point in the user journey do you require authentication? Leading implementations use a “progressive authentication” approach—allowing anonymous exploration with strategic prompts to authenticate when value is clear.
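Here is a minimal sketch of progressive authentication. It assumes your setup lets anonymous requests reach your backend and that the backend can tell whether a request carries a linked account. The operation names and the `auth_required` response shape are hypothetical. The gated branch does not fail. It returns a structured hint that the model can phrase as an invitation to connect an account at the moment the value is clear.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical operations: which work anonymously and which need a linked account.
ANONYMOUS_OPS = {"search_products", "get_product_details"}
AUTHENTICATED_OPS = {"save_to_wishlist", "get_order_history"}


@dataclass
class Request:
    operation: str
    user_id: Optional[str]  # None when the user has not connected an account


def handle(request: Request) -> dict:
    """Serve anonymous operations freely; gate personalized ones with a soft prompt."""
    if request.operation in ANONYMOUS_OPS:
        return {"status": "ok", "operation": request.operation}
    if request.operation in AUTHENTICATED_OPS:
        if request.user_id is None:
            # Not an error: a structured hint the model can turn into a sign-in suggestion.
            return {
                "status": "auth_required",
                "reason": f"'{request.operation}' needs a connected account.",
                "value_if_connected": "Save items and see past orders across sessions.",
            }
        return {"status": "ok", "operation": request.operation, "user_id": request.user_id}
    return {"status": "unknown_operation", "operation": request.operation}


print(handle(Request("search_products", None))["status"])   # ok
print(handle(Request("save_to_wishlist", None))["status"])  # auth_required
```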

Implementation Considerations

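For OAuth implementations, ChatGPT uses the standard OAuth 2.0 authorization-code flow. You register an authorization URL, a token URL, a client ID and secret, and scopes. ChatGPT redirects users to your authorization endpoint, receives an authorization code at its callback URL, and exchanges that code at your token endpoint for an access token (and optionally a refresh token).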

Consider the failure scenarios: what happens when authentication expires mid-conversation? How do you handle users who refuse to authenticate? The smoothest implementations maintain conversational context while gracefully handling auth state changes.
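One way to survive token expiry mid-conversation is to issue refresh tokens. A standards-compliant OAuth client can then renew access without sending the user back through sign-in. The sketch below is a hypothetical token endpoint. It assumes client credentials arrive in the POST body, and it uses in-memory dictionaries in place of a database. The response fields (`access_token`, `token_type`, `expires_in`, `refresh_token`) and the error codes follow RFC 6749. Actions call `authenticate` and treat `None` as "not authenticated", so the client can re-authenticate.

```python
import secrets
import time
from typing import Dict, Optional, Tuple

ACCESS_TOKEN_TTL = 3600  # seconds; illustrative value

# In-memory stores for the sketch; a real service would persist these.
auth_codes: Dict[str, str] = {}                    # authorization code -> user_id (single use)
refresh_tokens: Dict[str, str] = {}                # refresh token -> user_id
access_tokens: Dict[str, Tuple[str, float]] = {}   # access token -> (user_id, expiry)

CLIENT_ID = "chatgpt-client"      # hypothetical credentials registered with ChatGPT
CLIENT_SECRET = "replace-me"


def _issue_tokens(user_id: str, now: float) -> dict:
    access = secrets.token_urlsafe(32)
    refresh = secrets.token_urlsafe(32)
    access_tokens[access] = (user_id, now + ACCESS_TOKEN_TTL)
    refresh_tokens[refresh] = user_id
    return {"access_token": access, "token_type": "bearer",
            "expires_in": ACCESS_TOKEN_TTL, "refresh_token": refresh}


def token_endpoint(form: dict, now: Optional[float] = None) -> dict:
    """Handle the initial code exchange and silent refresh."""
    now = time.time() if now is None else now
    if form.get("client_id") != CLIENT_ID or form.get("client_secret") != CLIENT_SECRET:
        return {"error": "invalid_client"}
    grant = form.get("grant_type")
    if grant == "authorization_code":
        user_id = auth_codes.pop(form.get("code", ""), None)  # pop: codes are single use
        return _issue_tokens(user_id, now) if user_id else {"error": "invalid_grant"}
    if grant == "refresh_token":
        user_id = refresh_tokens.pop(form.get("refresh_token", ""), None)  # rotate on use
        return _issue_tokens(user_id, now) if user_id else {"error": "invalid_grant"}
    return {"error": "unsupported_grant_type"}


def authenticate(access_token: str, now: Optional[float] = None) -> Optional[str]:
    """Return the user for a valid, unexpired token; None otherwise."""
    now = time.time() if now is None else now
    entry = access_tokens.get(access_token)
    if entry is None or entry[1] <= now:
        return None
    return entry[0]
```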

Business Impact

Your authentication strategy directly affects conversion rates and user retention. Data from early ChatGPT commerce integrations shows that requiring authentication too early can reduce usage by 40-60%, but waiting too long means missing opportunities to capture user intent when it’s highest.

2. Actions Design: Building Tools That AI Can Actually Use

The Technical Foundation

ChatGPT apps extend functionality through “actions”—essentially API endpoints that the AI can call. These actions are defined using OpenAPI specifications, and the quality of your action design determines whether the AI can successfully help users accomplish tasks.

Critical Design Principles

The most effective actions are atomic, well-documented, and have clear success/failure states. Unlike traditional API design where you optimize for developer efficiency, ChatGPT actions must be optimized for AI comprehension.

This means verbose parameter descriptions, explicit examples of valid inputs, and detailed error messages that the AI can interpret and explain to users. An action description like “searches products” is insufficient—you need “Searches the product catalog by keyword, category, or filters. Returns up to 20 results with product ID, name, price, availability, and image URL. Use this when users want to browse or find specific products.”
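The same principle can be expressed as an OpenAPI operation. It is written here as a Python dict so it can be generated and checked in code before being serialized to JSON for the action editor. The server URL, path, and parameter names are hypothetical. Each parameter carries its own description and an example of a valid value.

```python
import json

# Hypothetical OpenAPI 3.1 fragment for a "search products" action.
search_products_spec = {
    "openapi": "3.1.0",
    "info": {"title": "Example Store Actions", "version": "1.0.0"},
    "servers": [{"url": "https://api.example.com"}],
    "paths": {
        "/products/search": {
            "get": {
                "operationId": "searchProducts",
                "summary": "Search the product catalog",
                "description": (
                    "Searches the product catalog by keyword, category, or filters. "
                    "Returns up to 20 results with product ID, name, price, availability, "
                    "and image URL. Use this when users want to browse or find specific products."
                ),
                "parameters": [
                    {"name": "q", "in": "query", "required": False,
                     "description": "Free-text keywords, e.g. 'waterproof trail running shoes'.",
                     "schema": {"type": "string"}},
                    {"name": "category", "in": "query", "required": False,
                     "description": "Category slug, e.g. 'footwear'. Omit to search everything.",
                     "schema": {"type": "string"}},
                    {"name": "max_price", "in": "query", "required": False,
                     "description": "Upper price bound in the store currency, e.g. 120.",
                     "schema": {"type": "number", "minimum": 0}},
                ],
                "responses": {
                    "200": {"description": "Up to 20 matching products."},
                    "400": {"description": "Invalid filter; the body names the parameter to fix."},
                },
            }
        }
    },
}

# Serialized form to paste into the action editor.
spec_json = json.dumps(search_products_spec, indent=2)
```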

Common Pitfalls

Many first-time implementations create actions that are too complex (requiring 8+ parameters), too ambiguous (unclear when to use one action versus another), or too numerous (dozens of overlapping actions confusing the AI about which to invoke).
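These pitfalls are easy to check automatically before each deployment. The sketch below is a rough version of such a check. It assumes action definitions are available as dicts with `description` and `parameters` keys. The parameter limit mirrors the 8+ warning above. The other thresholds are guesses you would need to tune.

```python
from typing import Dict, List

MAX_PARAMS = 7               # 8+ parameters is flagged as too complex
MIN_DESCRIPTION_WORDS = 12   # hypothetical "descriptive enough" threshold
MAX_ACTIONS = 20             # hypothetical ceiling before tool selection gets muddy


def lint_actions(actions: Dict[str, dict]) -> List[str]:
    """Flag actions that are too complex, too terse, or duplicate each other."""
    warnings: List[str] = []
    seen_descriptions: Dict[str, str] = {}
    for name, spec in actions.items():
        params = spec.get("parameters", [])
        if len(params) > MAX_PARAMS:
            warnings.append(f"{name}: {len(params)} parameters; consider splitting")
        description = spec.get("description", "")
        if len(description.split()) < MIN_DESCRIPTION_WORDS:
            warnings.append(f"{name}: description too short for reliable selection")
        key = description.strip().lower()
        if key and key in seen_descriptions:
            warnings.append(f"{name}: same description as {seen_descriptions[key]}")
        seen_descriptions.setdefault(key, name)
    if len(actions) > MAX_ACTIONS:
        warnings.append(f"{len(actions)} actions defined; overlapping tools confuse selection")
    return warnings
```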

The best implementations follow the principle of composability: simple actions that can be chained together rather than complex multi-purpose endpoints. For example, separate actions for “search products,” “get product details,” and “check availability” work better than one complex “product lookup” action.

Testing and Iteration

Action design requires extensive testing with real conversational flows. What seems obvious in API documentation often proves confusing when an AI attempts to use it. Budget time for iterative refinement based on actual usage patterns—expect to spend 30-40% of development time on action design and optimization.

3. Commerce Integration: From Discovery to Transaction

The Opportunity

OpenAI’s Agentic Commerce Protocol, developed with Stripe, enables direct purchasing within ChatGPT conversations. It is potentially the most significant shift in e-commerce user experience since mobile apps. Users can discover, research, and purchase products without leaving the conversation.

Technical Requirements

Full commerce integration requires implementing the checkout session management API (creating, updating, and completing sessions), delegated payment tokenization (enabling ChatGPT to facilitate payments without handling sensitive card data), and order lifecycle webhooks (keeping ChatGPT synchronized with fulfillment status).
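The session lifecycle can be modeled as a small state machine. This sketch is hypothetical throughout. The states, method names, and the `order_created` event are illustrative stand-ins, and the Agentic Commerce Protocol specification defines the actual request and response shapes. Prices are kept in minor units (cents) to avoid floating-point rounding.

```python
import uuid
from dataclasses import dataclass, field
from typing import Dict, List

# Hypothetical session states; the protocol spec defines the real ones.
OPEN, READY, COMPLETED, CANCELED = "open", "ready_for_payment", "completed", "canceled"


@dataclass
class CheckoutSession:
    id: str
    items: Dict[str, int] = field(default_factory=dict)  # sku -> quantity
    shipping_address: str = ""
    status: str = OPEN
    events: List[str] = field(default_factory=list)      # what an order webhook would report


class CheckoutService:
    def __init__(self, prices: Dict[str, int]):
        self.prices = prices  # sku -> price in cents
        self.sessions: Dict[str, CheckoutSession] = {}

    def create(self, items: Dict[str, int]) -> CheckoutSession:
        session = CheckoutSession(id=str(uuid.uuid4()), items=dict(items))
        self.sessions[session.id] = session
        return session

    def update(self, session_id: str, shipping_address: str) -> CheckoutSession:
        session = self.sessions[session_id]
        if session.status in (COMPLETED, CANCELED):
            raise ValueError(f"cannot update a {session.status} session")
        session.shipping_address = shipping_address
        session.status = READY if shipping_address else OPEN
        return session

    def total(self, session_id: str) -> int:
        session = self.sessions[session_id]
        return sum(self.prices[sku] * qty for sku, qty in session.items.items())

    def complete(self, session_id: str) -> CheckoutSession:
        session = self.sessions[session_id]
        if session.status != READY:
            raise ValueError("session is not ready for payment")
        session.status = COMPLETED
        session.events.append("order_created")  # then notify ChatGPT via a webhook
        return session
```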

The payment delegation API is particularly critical—it allows you to tokenize payment methods with spending allowances and expiry times, enabling frictionless purchases while maintaining PCI DSS compliance. Your system receives a vault token that can be charged within specified parameters.
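Whatever your payment provider does with the token, your charge path should refuse anything outside the allowance. The sketch below shows that check. It assumes a hypothetical `VaultToken` record carrying a maximum amount, a currency, a checkout session, and an expiry. The real field names come from the protocol spec and your provider.

```python
from dataclasses import dataclass
from datetime import datetime, timezone


@dataclass(frozen=True)
class VaultToken:
    """Hypothetical shape of a delegated payment token and its allowance."""
    token_id: str
    max_amount: int          # minor units, e.g. cents
    currency: str
    checkout_session_id: str
    expires_at: datetime


def can_charge(token: VaultToken, amount: int, currency: str,
               session_id: str, now: datetime) -> bool:
    """Charge only within the allowance: amount cap, currency, session scope, expiry."""
    return (
        0 < amount <= token.max_amount
        and currency == token.currency
        and session_id == token.checkout_session_id
        and now < token.expires_at
    )


tok = VaultToken("vt_1", 20000, "usd", "cs_1", datetime(2025, 10, 17, tzinfo=timezone.utc))
print(can_charge(tok, 17998, "usd", "cs_1", datetime(2025, 10, 16, tzinfo=timezone.utc)))  # True
```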

Strategic Considerations

The business model implications are substantial. Traditional e-commerce relies on browsing-based discovery and multi-step checkout flows designed to maximize basket size. Conversational commerce collapses these steps, potentially reducing average order values but dramatically increasing conversion rates on purchase intent.

Early data from Shopify and Etsy integrations on ChatGPT suggests conversion rates 2-3x higher than traditional e-commerce, but with 15-20% lower average order values. The net effect on revenue depends entirely on your customer acquisition costs and lifetime value calculations.
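To see why the net effect varies, treat revenue from purchase-intent sessions as conversion rate times average order value. Applying the ranges quoted above gives a revenue multiplier per intent-bearing session of between 2 × 0.80 = 1.6 and 3 × 0.85 = 2.55. This says nothing about acquisition cost or lifetime value, which still decide the final answer.

```python
def revenue_multiplier(conversion_lift: float, aov_drop: float) -> float:
    """Revenue per intent-bearing session relative to baseline: conversion x AOV."""
    return conversion_lift * (1.0 - aov_drop)


low = revenue_multiplier(2.0, 0.20)   # most pessimistic pairing of the quoted ranges
high = revenue_multiplier(3.0, 0.15)  # most optimistic pairing
print(f"{low:.2f}x to {high:.2f}x")   # 1.60x to 2.55x
```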

Implementation Realities

Plan for 3-4 months of development for full commerce integration if building from scratch. You’ll need robust error handling (what happens when items go out of stock mid-conversation?), inventory synchronization, pricing and tax calculations that can respond to conversational context, and fraud prevention that works without traditional signals like browsing behavior.

4. Data Privacy Architecture: What AI Sees and Remembers

The Fundamental Question

Every message users send through your ChatGPT app passes through OpenAI’s infrastructure. What data are you comfortable having in that pipeline? What can’t legally or ethically leave your infrastructure?

Architecture Patterns

The most privacy-conscious implementations use a “thin data” approach—ChatGPT receives only the minimum information needed to facilitate the conversation, with sensitive data remaining in your systems and referenced by opaque identifiers.

For example, rather than sending full customer profiles to ChatGPT, send customer IDs. When ChatGPT needs to reference order history, your action returns summarized, non-sensitive order data. Financial information, health data, and personally identifiable information should generally stay within your infrastructure.
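Here is a minimal sketch of the thin-data pattern, assuming a hypothetical order record shape. Internal customer IDs are swapped for random opaque references that only your server can resolve. Order summaries drop payment, address, and contact fields before anything is returned to ChatGPT. In production the mapping would live in a database rather than a dict.

```python
import secrets
from typing import Dict, List


class ReferenceMap:
    """Maps internal customer IDs to opaque references that reveal nothing."""

    def __init__(self) -> None:
        self._to_ref: Dict[str, str] = {}
        self._to_internal: Dict[str, str] = {}

    def ref_for(self, internal_id: str) -> str:
        if internal_id not in self._to_ref:
            ref = "ref_" + secrets.token_hex(8)
            self._to_ref[internal_id] = ref
            self._to_internal[ref] = internal_id
        return self._to_ref[internal_id]

    def resolve(self, ref: str) -> str:
        return self._to_internal[ref]  # KeyError for unknown or forged references


def summarize_orders(orders: List[dict]) -> List[dict]:
    """Return only what the conversation needs; card, address, contact data stay internal."""
    return [
        {"order_ref": o["order_ref"], "placed_on": o["placed_on"],
         "status": o["status"], "item_count": len(o["items"])}
        for o in orders
    ]
```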

Regulatory Compliance

GDPR, CCPA, and sector-specific regulations (HIPAA, GLBA, PCI DSS) all have implications for ChatGPT integrations. The critical questions: What constitutes user consent for AI processing? How do you honor data deletion requests when conversation history lives in OpenAI’s systems? What audit trails do you need?

Leading implementations treat the ChatGPT integration boundary as a trust boundary, implementing the same data controls you’d apply to any third-party service. This means data classification, encryption in transit, and explicit logging of what data crosses the boundary.
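Explicit boundary logging can be as simple as a filter that every action response passes through. This sketch assumes a hypothetical field-classification table. Unclassified fields are treated as restricted by default. Only field names, never values, are written to the log, so the audit trail does not itself become a data leak.

```python
import logging
from typing import Dict, List, Tuple

logging.basicConfig(level=logging.INFO)
log = logging.getLogger("chatgpt_boundary")

# Hypothetical classification; anything missing from this table is treated as restricted.
FIELD_CLASSES: Dict[str, str] = {
    "order_ref": "public",
    "status": "public",
    "item_count": "public",
    "first_name": "internal",
    "email": "restricted",
    "card_last4": "restricted",
}
ALLOWED = {"public", "internal"}


def cross_boundary(payload: dict, action: str) -> Tuple[dict, List[str]]:
    """Drop restricted or unclassified fields, then log which fields left your systems."""
    allowed = {k: v for k, v in payload.items() if FIELD_CLASSES.get(k) in ALLOWED}
    dropped = sorted(set(payload) - set(allowed))
    log.info("action=%s sent=%s dropped=%s", action, sorted(allowed), dropped)
    return allowed, dropped
```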

OpenAI’s Commitments

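OpenAI provides several important commitments for business customers. By default, data sent through the OpenAI API or created in ChatGPT Enterprise workspaces is not used to train models unless the customer explicitly opts in. Eligible API customers can arrange zero data retention, and enterprise agreements include specific data protection clauses. Conversations that consumers have with your app inside their own ChatGPT accounts are generally governed by those users’ data settings rather than by your agreement. However, your legal and compliance teams need to validate these commitments against your specific requirements.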

5. Conversational UX: Designing for Natural Language Interaction

The Paradigm Shift

Traditional product design focuses on visual interfaces, clearly defined flows, and explicit user actions. Conversational interfaces invert these assumptions—there’s no guaranteed flow, users express intent in unpredictable ways, and context must be inferred rather than explicitly provided.

Design Principles for Conversational Products

The most successful ChatGPT integrations embrace ambiguity rather than fighting it. Instead of trying to force users into predefined flows, they support open-ended exploration with strategic guardrails.

This means building actions that can handle partial information (“I want running shoes” without specifying size, color, or budget), gracefully recover from misunderstandings (offering clarifying questions rather than errors), and maintain context across multi-turn conversations (remembering what was discussed three exchanges ago).
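Handling partial information mostly means answering with what you have and signaling what is missing, rather than returning an error. The sketch below uses hypothetical slot names for the running-shoes example. Only the category is treated as required. The remaining gaps come back as optional refinements the model can ask about.

```python
from typing import Dict, List, Optional

# Hypothetical slots for a product search.
REQUIRED_SLOTS = ["category"]
HELPFUL_SLOTS = ["size", "budget", "color"]


def search_or_clarify(slots: Dict[str, Optional[str]]) -> dict:
    """Search with whatever the user gave; suggest refinements instead of raising errors."""
    missing_required = [s for s in REQUIRED_SLOTS if not slots.get(s)]
    if missing_required:
        return {"status": "needs_clarification", "ask_about": missing_required}
    missing_helpful: List[str] = [s for s in HELPFUL_SLOTS if not slots.get(s)]
    return {
        "status": "ok",
        "applied_filters": {k: v for k, v in slots.items() if v},
        # Optional follow-up questions the model may offer.
        "could_refine_by": missing_helpful,
    }


print(search_or_clarify({"category": "running shoes"})["could_refine_by"])
```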

Setting User Expectations

A critical early decision: what persona should your ChatGPT app embody? Helpful assistant? Expert consultant? Enthusiastic advocate? The personality you choose shapes user expectations about capabilities, tone, and appropriate use cases.

Document your capabilities clearly in the app description, but more importantly, design conversational flows that naturally communicate boundaries. For example, if your app can’t process returns, the conversation should proactively mention “I can help you find products and complete purchases, but for returns you’ll need to visit [your website].”

Handling Edge Cases

Users will attempt tasks your app wasn’t designed for. They’ll ask for capabilities you don’t have. They’ll provide information in formats you didn’t anticipate. Budget significant time for edge case handling—testing with real users typically reveals 3-4x more edge cases than internal testing.

The smoothest implementations use progressive disclosure, starting with simple, high-confidence responses and offering more detail or options as conversations progress. They also explicitly communicate uncertainty (“I’m not entirely sure what you mean—did you want…?”) rather than guessing incorrectly.

6. Discovery Strategy: Standing Out in the GPT Store

The Marketplace Reality

The GPT Store contains thousands of custom GPTs competing for attention. Unlike traditional app stores where visual design and screenshots drive discovery, GPT Store success depends primarily on clear value propositions, effective keyword optimization, and initial user ratings.

Optimization Tactics

Your GPT’s name and description are critical for discovery. They need to be immediately comprehensible (users should understand your value proposition in 5 seconds), keyword-rich (what terms would users search for?), and differentiated (why choose your GPT over alternatives?).

The most successful GPTs in consumer categories (shopping, travel, productivity) follow a pattern: [Action] + [Domain]—“Find and Book Flights,” “Shop Sustainable Fashion,” “Plan Healthy Meals.” Avoid clever names that obscure functionality.

Launch Strategy

Unlike mobile apps where “soft launch” strategies are common, GPT distribution tends to be binary—either users find and engage with your GPT, or they don’t. This means having a comprehensive launch plan including promotion to your existing user base, integration with your main product, and strategic placement in relevant communities.

Consider the activation loop: how do users discover your GPT is relevant to their current need? The most effective strategy is contextual promotion—when users are researching products on your website, suggest “Continue this conversation in ChatGPT with our shopping assistant.”

Measuring Success

Traditional app store metrics (downloads, ratings) apply, but ChatGPT-specific metrics matter more: conversation completion rates (what percentage of users who start a conversation accomplish their goal?), conversation length (are users engaged or frustrated?), and action success rates (do your API calls work reliably?).

Whatever analytics OpenAI exposes to builders, instrumenting your own tracking is essential for understanding actual user behavior and business outcomes.

7. Cost Architecture: Managing AI Usage Economics

The Cost Structure

Unlike traditional software where marginal costs approach zero, AI-powered applications have real per-interaction costs: OpenAI API charges for GPT model usage, your infrastructure costs for serving actions, and data transfer costs for API calls.

For a conversational commerce application, expect costs of $0.03-$0.08 per conversation with moderate action usage. This seems trivial until you’re processing hundreds of thousands of conversations monthly—suddenly AI costs represent 10-15% of customer acquisition costs.

Optimization Strategies

The most effective cost optimization focuses on three areas: reducing unnecessary action calls (ensuring ChatGPT only invokes actions when truly necessary), implementing response caching (storing and reusing responses for common queries), and right-sizing action responses (returning only data the AI actually needs rather than full database dumps).
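For common, non-personalized queries, response caching can be a small time-to-live cache in front of your catalog calls. This sketch assumes cache keys are built from the action name plus normalized arguments. The clock is injectable so that expiry can be tested. Keep the TTL short for anything that depends on inventory or price.

```python
import time
from typing import Callable, Dict, Hashable, Tuple


class TTLCache:
    """Reuse action responses for identical queries within a freshness window."""

    def __init__(self, ttl_seconds: float, clock: Callable[[], float] = time.monotonic):
        self.ttl = ttl_seconds
        self.clock = clock
        self._store: Dict[Hashable, Tuple[float, object]] = {}
        self.hits = 0
        self.misses = 0

    def get_or_compute(self, key: Hashable, compute: Callable[[], object]) -> object:
        now = self.clock()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        value = compute()
        self._store[key] = (now, value)
        return value
```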

Token management is particularly important—verbose action descriptions and responses consume tokens quickly. The best implementations use concise descriptions with external documentation for humans, and return structured data rather than natural language from actions (ChatGPT can format structured data for users, but you pay for every token in the response).

Business Model Implications

Cost structure directly affects pricing strategy. If your traditional product has high gross margins (70-80%), absorbing AI costs in the base price makes sense. If you’re operating on lower margins (30-40%), you may need to reserve AI features for premium tiers or charge directly for usage.

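Consider the customer lifetime value calculation. Suppose ChatGPT integration reduces acquisition costs by 30% (users convert faster) but increases per-user serving costs by 5%. The net effect is positive unless lifetime serving costs exceed six times acquisition costs, because below that ratio a 30% saving on acquisition outweighs a 5% increase in serving. Model these economics explicitly before committing to specific features.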
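A worked example with hypothetical figures makes the threshold concrete. The 30% acquisition saving outweighs the 5% serving increase whenever 0.30 × CAC > 0.05 × serving cost, that is, whenever lifetime serving cost is below six times CAC. With CAC at $100 and lifetime serving cost at $200:

```python
def lifetime_cost(cac: float, serving_cost: float,
                  cac_change: float = 0.0, serving_change: float = 0.0) -> float:
    """Acquisition plus lifetime serving cost per customer after fractional changes."""
    return cac * (1 + cac_change) + serving_cost * (1 + serving_change)


before = lifetime_cost(100.0, 200.0)               # 100 + 200
after = lifetime_cost(100.0, 200.0, -0.30, 0.05)   # 70 + 210
print(f"saved per customer: ${before - after:.2f}")  # saved per customer: $20.00
```

At a serving cost of $600, exactly six times CAC, the two effects cancel (70 + 630 = 100 + 600).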

Monitoring and Alerts

Implement real-time cost monitoring with alerts for anomalous usage. A bug that causes infinite action loops can consume thousands of dollars in API calls within hours. Leading implementations track cost per conversation, cost per user, and cost per completed transaction, with automated alerts when metrics exceed thresholds.
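The sketch below shows per-conversation cost tracking with a simple loop detector. Spend accumulates per conversation, and a sliding 60-second window counts action calls. The budget and rate limits are hypothetical. The $0.08 example budget is taken from the upper end of the per-conversation estimate above. Alert delivery is left to your monitoring stack.

```python
from collections import defaultdict, deque
from typing import Deque, Dict, List


class CostMonitor:
    """Track spend per conversation and flag runaway action loops early."""

    def __init__(self, max_cost_per_conversation: float, max_calls_per_minute: int):
        self.max_cost = max_cost_per_conversation
        self.max_rate = max_calls_per_minute
        self.cost: Dict[str, float] = defaultdict(float)
        self.calls: Dict[str, Deque[float]] = defaultdict(deque)

    def record(self, conversation_id: str, cost: float, timestamp: float) -> List[str]:
        alerts: List[str] = []
        self.cost[conversation_id] += cost
        window = self.calls[conversation_id]
        window.append(timestamp)
        while window and timestamp - window[0] > 60:  # keep only the last 60 seconds
            window.popleft()
        if self.cost[conversation_id] > self.max_cost:
            alerts.append(f"{conversation_id}: cost ${self.cost[conversation_id]:.2f} over budget")
        if len(window) > self.max_rate:
            alerts.append(f"{conversation_id}: {len(window)} action calls in 60s, possible loop")
        return alerts
```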

8. Error Handling: Graceful Degradation in Conversation

The Failure Modes

Conversational applications introduce unique failure scenarios: actions can timeout mid-conversation, APIs can return unexpected errors, third-party services can become unavailable, and users can provide information in formats your system can’t process.

Unlike traditional applications where error states can be explicitly designed and tested, conversational errors emerge organically from the infinite combination of possible user inputs and system states.

Design Principles

The cardinal rule: errors should never break the conversation. Even when your backend is completely unavailable, the GPT should be able to communicate the situation, suggest alternatives, and maintain conversational context.

This requires designing actions with explicit error responses that the AI can interpret and communicate naturally. Instead of HTTP 500 errors, return structured error objects: {"error": "inventory_check_unavailable", "message": "I'm having trouble checking current inventory. You can view availability on our website at [link] or I can help you browse other products.", "fallback_actions": ["search_products", "browse_categories"]}.
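A wrapper like the following keeps exceptions from surfacing as bare server errors. It is a sketch. The `FALLBACKS` table, the `UpstreamUnavailable` exception, and the message wording are hypothetical. The response mirrors the structured error object described above.

```python
from typing import Callable, Dict, List

# Hypothetical mapping from each action to alternatives the model can offer instead.
FALLBACKS: Dict[str, List[str]] = {
    "check_inventory": ["search_products", "browse_categories"],
    "create_checkout": ["get_product_page_link"],
}


class UpstreamUnavailable(Exception):
    """Raised by backend clients when a dependency is down or times out."""


def run_action(name: str, fn: Callable[[], dict], website_url: str) -> dict:
    """Run an action and convert any failure into an object the model can explain."""
    try:
        return {"ok": True, "data": fn()}
    except UpstreamUnavailable:
        reason = "is temporarily unavailable"
    except Exception:  # unexpected bug: still answer in conversational form
        reason = "ran into an unexpected problem"
    return {
        "ok": False,
        "error": f"{name}_unavailable",
        "message": f"The {name.replace('_', ' ')} step {reason}. "
                   f"The user can continue at {website_url}.",
        "fallback_actions": FALLBACKS.get(name, []),
    }
```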

Retry Logic and Idempotency

Actions need idempotency guarantees—if ChatGPT retries a failed action, it shouldn’t create duplicate orders or apply discounts twice. Include idempotency keys in action requests and implement deduplication in your infrastructure.
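Here is a minimal deduplication layer. It assumes each mutating request carries a key, for example a required `idempotency_key` parameter in the action schema (a hypothetical name). A retried request with the same key replays the stored result. Reusing a key with a different payload is rejected. The single lock keeps the sketch simple; a production service would more likely rely on a unique constraint in its database.

```python
import hashlib
import json
import threading
from typing import Callable, Dict


class IdempotencyStore:
    """Remember each key's result so retries replay instead of re-executing."""

    def __init__(self) -> None:
        self._results: Dict[str, dict] = {}
        self._fingerprints: Dict[str, str] = {}
        self._lock = threading.Lock()  # one global lock: simple, but serializes requests

    def execute(self, key: str, request: dict, handler: Callable[[dict], dict]) -> dict:
        fingerprint = hashlib.sha256(json.dumps(request, sort_keys=True).encode()).hexdigest()
        with self._lock:
            if key in self._results:
                if self._fingerprints[key] != fingerprint:
                    return {"error": "idempotency_key_reused",
                            "message": "This key was already used for a different request."}
                return self._results[key]  # replay the original outcome
            result = handler(request)
            self._results[key] = result
            self._fingerprints[key] = fingerprint
            return result
```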

For long-running actions (inventory checks, shipping calculations), implement timeout handling that provides partial results or alternative paths rather than leaving users waiting indefinitely.
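Partial results on timeout can be sketched with `asyncio.wait`, which returns finished and unfinished tasks once a deadline passes. The carrier names and delays are hypothetical stand-ins for slow third-party calls. Anything still pending is cancelled and reported, so the model can tell the user which quotes are missing.

```python
import asyncio
from typing import Dict, List


async def quote_carrier(carrier: str, delay: float) -> Dict[str, object]:
    """Stand-in for a slow third-party shipping API (hypothetical)."""
    await asyncio.sleep(delay)
    return {"carrier": carrier, "price": 5.0}


async def shipping_quotes(carriers: Dict[str, float], timeout: float) -> dict:
    """Return whatever quotes arrive within the timeout instead of waiting indefinitely."""
    tasks = {asyncio.create_task(quote_carrier(c, d)): c for c, d in carriers.items()}
    done, pending = await asyncio.wait(set(tasks), timeout=timeout)
    for task in pending:
        task.cancel()
    quotes: List[dict] = [t.result() for t in done if t.exception() is None]
    return {
        "quotes": sorted(quotes, key=lambda q: q["carrier"]),
        "timed_out": sorted(tasks[t] for t in pending),
        "partial": bool(pending),
    }


result = asyncio.run(shipping_quotes({"fast_co": 0.01, "slow_co": 2.0}, timeout=0.2))
print(result["timed_out"])  # ['slow_co']
```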

User Communication

The smoothest implementations treat errors as opportunities for alternative value delivery. If product search fails, offer category browsing. If checkout is unavailable, provide a direct link to complete the purchase on your website. If authentication expires, explain the situation and offer re-authentication without losing conversation context.

9. Analytics and Learning: Understanding Conversational User Behavior

The Measurement Challenge

Traditional product analytics (page views, click paths, session duration) don’t map cleanly to conversational interfaces. A “successful” conversation might be 2 messages or 20. A user might abandon a conversation because they got their answer or because they were frustrated.

Key Metrics Framework

Effective ChatGPT app analytics focus on three categories: engagement metrics (conversation starts, average conversation length, return user rate), completion metrics (percentage of conversations where users accomplish apparent goals, action success rates, checkout completion), and business metrics (revenue per conversation, customer acquisition cost, lifetime value of ChatGPT-sourced customers).
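Assume you log one record per conversation. The `Conversation` fields below are hypothetical and would come from your own event tracking. Under that assumption, the engagement, completion, and business metrics reduce to simple aggregates:

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Conversation:
    id: str
    messages: int
    goal_completed: bool    # e.g. derived from a checkout or booking event
    action_calls: int
    action_failures: int
    revenue: float


def summarize(conversations: List[Conversation]) -> dict:
    """Aggregate engagement, completion, and business metrics."""
    if not conversations:
        return {"conversations": 0}
    n = len(conversations)
    calls = sum(c.action_calls for c in conversations)
    failures = sum(c.action_failures for c in conversations)
    return {
        "conversations": n,
        "avg_length": sum(c.messages for c in conversations) / n,
        "completion_rate": sum(c.goal_completed for c in conversations) / n,
        "action_success_rate": (calls - failures) / calls if calls else None,
        "revenue_per_conversation": sum(c.revenue for c in conversations) / n,
    }
```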

The most sophisticated implementations use conversation analysis to classify user intent and outcomes: “product research,” “immediate purchase,” “customer support,” “abandoned due to unavailable product,” “abandoned due to confusion,” etc. This qualitative categorization reveals optimization opportunities that quantitative metrics miss.

Continuous Improvement

Unlike traditional products where A/B testing is standard practice, testing conversational experiences is more complex. You can’t simply show two different versions and measure outcomes—conversations are inherently unique and context-dependent.

Instead, focus on iterative improvement based on failure analysis. Review conversations where users abandoned, where actions failed, or where users explicitly expressed frustration. These failure modes reveal where your app’s conversational design, action architecture, or business logic need refinement.

Privacy-Preserving Analytics

Remember that conversation transcripts contain user data. Your analytics implementation needs appropriate data handling: anonymization of personally identifiable information, secure storage with appropriate access controls, and explicit data retention policies.

Leading implementations separate quantitative metrics (stored indefinitely, fully anonymized) from qualitative conversation analysis (sample-based, time-limited retention, access-controlled).
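The sketch below covers the qualitative side in rough form. It applies crude regex redaction before transcripts enter the analysis sample, and a purge function enforces a retention window. The patterns and the 30-day policy are assumptions. The phone pattern also masks long digit runs such as dates, which errs toward over-redaction. A dedicated PII-detection service would catch far more than these two patterns.

```python
import re
from datetime import datetime, timedelta, timezone
from typing import List, Tuple

EMAIL = re.compile(r"[\w.+-]+@[\w-]+\.[\w.-]+")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")
TRANSCRIPT_RETENTION = timedelta(days=30)  # hypothetical policy


def redact(text: str) -> str:
    """Mask obvious identifiers; emails first so their digits are not read as phones."""
    return PHONE.sub("[PHONE]", EMAIL.sub("[EMAIL]", text))


def purge_expired(samples: List[Tuple[datetime, str]], now: datetime) -> List[Tuple[datetime, str]]:
    """Keep only transcripts still inside the retention window."""
    return [(ts, text) for ts, text in samples if now - ts < TRANSCRIPT_RETENTION]
```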

10. Platform Evolution: Building for a Moving Target

The Reality

OpenAI ships new GPT model versions every 3-6 months, updates the actions API regularly, and introduces new capabilities (like Agentic Commerce) without extensive preview periods. Your ChatGPT app needs architecture that accommodates rapid platform evolution.

Version Management Strategy

The most resilient implementations avoid tight coupling to specific model versions or API features. This means designing actions with backwards compatibility (new parameters are optional, deprecated parameters degrade gracefully), implementing feature detection rather than version detection, and maintaining fallback implementations when newer features aren’t available.
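Backwards compatibility and feature detection can both live in the action layer. In this sketch, the deprecated alias, the new `sort` parameter, and the `rich_cards` capability name are all hypothetical. The point is that old callers keep working, unknown parameters are ignored, and richer output is chosen by capability rather than by version number.

```python
from typing import Any, Dict, List, Set

DEPRECATED_ALIASES = {"cat": "category"}   # old name -> new name (hypothetical)
KNOWN_PARAMS = {"q", "category", "max_price", "sort"}


def normalize_params(raw: Dict[str, Any]) -> Dict[str, Any]:
    """Map deprecated names, ignore unknown extras, default new optional parameters."""
    params: Dict[str, Any] = {}
    for name, value in raw.items():
        name = DEPRECATED_ALIASES.get(name, name)
        if name in KNOWN_PARAMS:
            params.setdefault(name, value)
    params.setdefault("sort", "relevance")  # newer optional parameter with a safe default
    return params


def render(results: List[dict], client_capabilities: Set[str]) -> dict:
    """Feature detection: branch on what the caller supports, not on a version string."""
    if "rich_cards" in client_capabilities:
        return {"type": "cards", "items": results}
    return {"type": "text", "items": [r["name"] for r in results]}
```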

For example, the recent introduction of async function calling in the Realtime API represents a significant capability improvement—but your app should work acceptably without it, taking advantage when available rather than requiring it.

Monitoring for Breaking Changes

Unlike traditional platform providers who offer extensive preview periods and detailed migration guides, OpenAI sometimes introduces changes with limited notice. Implement automated monitoring that detects when action success rates decline, conversation patterns shift, or error rates spike—these often indicate upstream platform changes before official announcements.
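One way to catch silent upstream changes is to compare a recent window of action outcomes against a longer baseline. The window sizes and the 10-point drop threshold are illustrative defaults to tune against your own traffic.

```python
from collections import deque
from typing import Deque, Optional


class SuccessRateMonitor:
    """Alert when the recent action success rate falls well below the baseline."""

    def __init__(self, baseline_size: int = 1000, recent_size: int = 100, max_drop: float = 0.10):
        self.baseline: Deque[bool] = deque(maxlen=baseline_size)
        self.recent: Deque[bool] = deque(maxlen=recent_size)
        self.max_drop = max_drop

    def record(self, success: bool) -> Optional[str]:
        if len(self.recent) == self.recent.maxlen:
            self.baseline.append(self.recent[0])  # oldest recent outcome moves into the baseline
        self.recent.append(success)               # the maxlen deque discards that oldest entry
        if len(self.baseline) < self.baseline.maxlen:
            return None  # still warming up
        base_rate = sum(self.baseline) / len(self.baseline)
        recent_rate = sum(self.recent) / len(self.recent)
        if base_rate - recent_rate > self.max_drop:
            return (f"action success rate fell from {base_rate:.0%} to {recent_rate:.0%}; "
                    "check for upstream platform changes")
        return None
```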

Migration Budgeting

Allocate 10-15% of ongoing development capacity to platform maintenance and migration. This may look like a generous allocation, but the pace of AI platform evolution makes it realistic. Teams that don’t budget for continuous migration find themselves in crisis mode with each platform update.

Staying Informed

The official OpenAI developer blog, the OpenAI API changelog, the ChatGPT release notes, and community forums like the OpenAI Developer Forum provide early signals about platform direction. More importantly, maintain relationships with OpenAI’s partnership and developer relations teams, because larger integrations often get advance notice of significant changes.

Strategic Decision Framework: Should You Build a ChatGPT App?

After considering these ten dimensions, the fundamental question remains: is building a ChatGPT app the right strategic move for your business?

Strong Indicators to Proceed

You should seriously consider ChatGPT integration if: your product benefits from conversational interaction (shopping, planning, problem-solving), your target customers are already ChatGPT users (knowledge workers, tech-savvy consumers, students), you can deliver unique value in a conversational context (personalization, expert guidance, complex configuration), and you have technical capacity for the 4-6 month initial build plus ongoing maintenance.

Reasons to Pause

Delay ChatGPT integration if: your product requires rich visual interaction that conversation can’t replicate, your target customers don’t use AI assistants regularly, you’re unable to invest in ongoing platform maintenance, or you have fundamental data privacy constraints that preclude third-party AI processing.

The Hybrid Approach

Many successful implementations start with a minimal ChatGPT app that handles one high-value use case (product search, appointment booking, initial consultation) and gradually expand capabilities based on user engagement and business impact. This “start small, scale based on results” approach reduces risk while maintaining strategic optionality.

Conclusion: Conversational Commerce as Strategic Capability

Building apps for ChatGPT isn’t just about creating another distribution channel—it’s about developing organizational capability in conversational product design, AI integration architecture, and natural language user experience.

The businesses that invest in this capability now, while the platform is still relatively early-stage, will have significant advantages as conversational interfaces become standard expectations. The considerations outlined here represent the foundation for that capability development.

The most successful implementations treat ChatGPT integration as a product discipline requiring dedicated resources, clear success metrics, and executive sponsorship. They invest in understanding conversational user behavior, build infrastructure for rapid iteration, and develop organizational expertise in AI-mediated customer interactions.

For CPOs, CTOs, and investment directors evaluating ChatGPT strategy, the question isn’t whether conversational interfaces will matter—they clearly will. The question is whether your organization will lead in this space or follow after the patterns are established. These ten considerations provide the framework for making that decision strategically rather than reactively.

Rob Pisacane works with mid-market consumer businesses to identify and prototype AI-powered solutions that drive measurable product-led growth. If you’re exploring ChatGPT integration strategy for your business, reach out to discuss how these considerations apply to your specific context.

What we've worked on lately.

View All

We Built an AI Research Tool in a Month

We built Another Flock's AI research platform in just one month—from initial concept to live product. It gives teams instant synthetic user feedback on their ideas, designs, and marketing materials.

Using AI to Boost Online Impulse Buys

We worked with a global consumer goods company to tackle a big problem: people don't impulse-buy online like they do in physical stores. Together, we identified and prototyped AI solutions that suggest smart product combinations to shoppers, helping boost those spontaneous purchases.

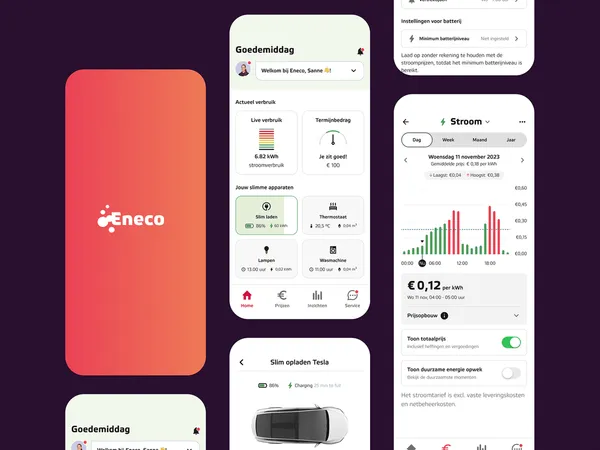

Launching a New Energy Pricing Model

We helped Eneco launch one of the Netherlands' first major dynamic energy tariffs in September 2023. Through user research, rapid prototyping, and brand work, we created a proposition that attracted environmentally conscious customers looking for more control over their energy costs.

"Nesta worked with The Product Bridge throughout 2024 to build and scale our Visit a Heat Pump service, transforming it from a proof of concept into a live, nationwide offering."

Alasdair Hiscock

Design Lead, Nesta